Speech To Text Feature with Flutter

A simple demonstration of the speech-to-text feature using the third-party speech_to_text plugin in Flutter.

Recently I was working on an application that needed a speech-to-text feature. To implement what I had in mind, I searched for the related Flutter packages and came across three: speech_to_text, speech_recognition, and google_speech. Of the three, I decided to go with the speech_to_text plugin, since I was working on a simple case. Here’s the link to the plugin on pub.dev: speech_to_text.

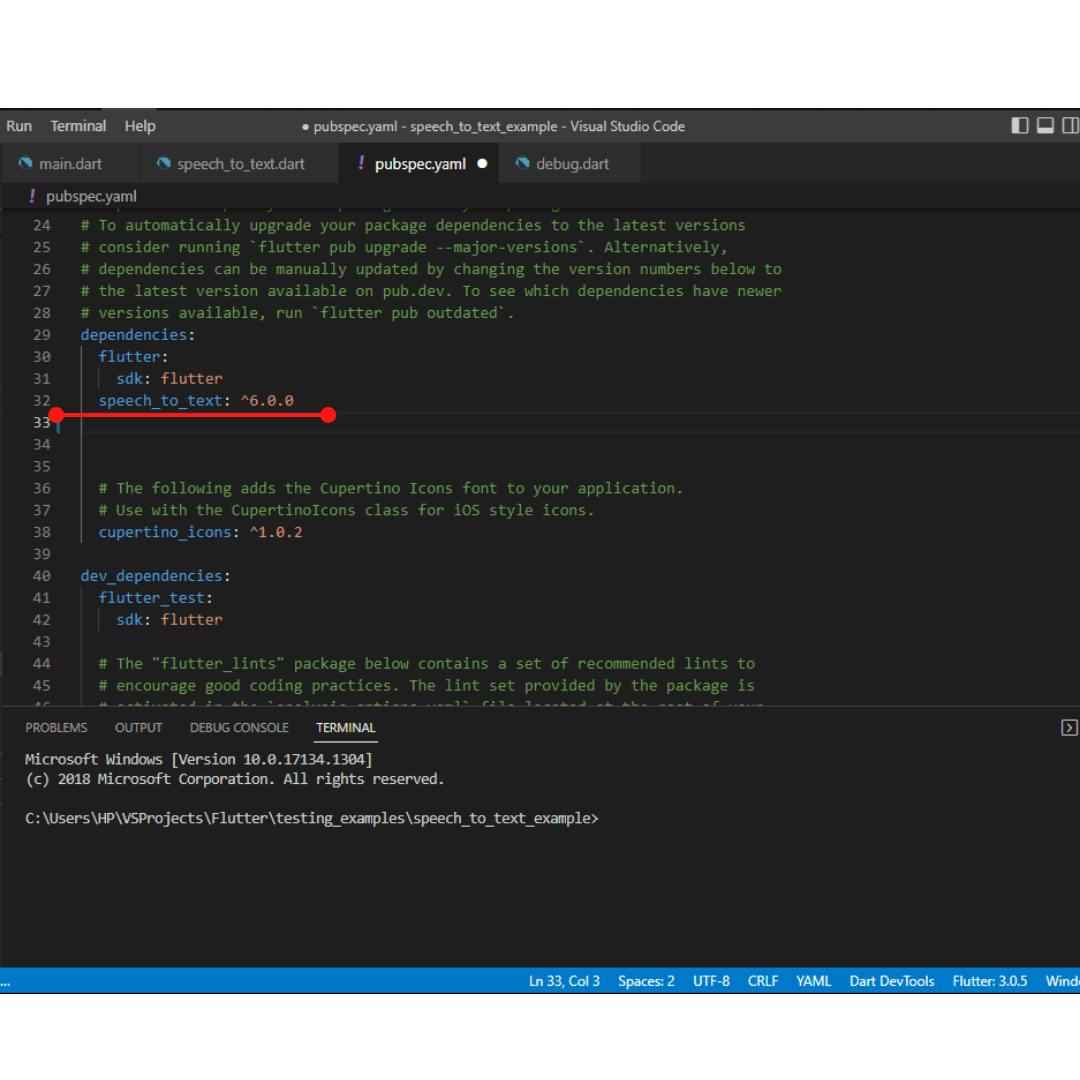

Below I describe the process I followed to set up the plugin and use it. To make use of the plugin, add it to the dependencies in the pubspec.yaml file, and then run flutter pub get after saving your changes:

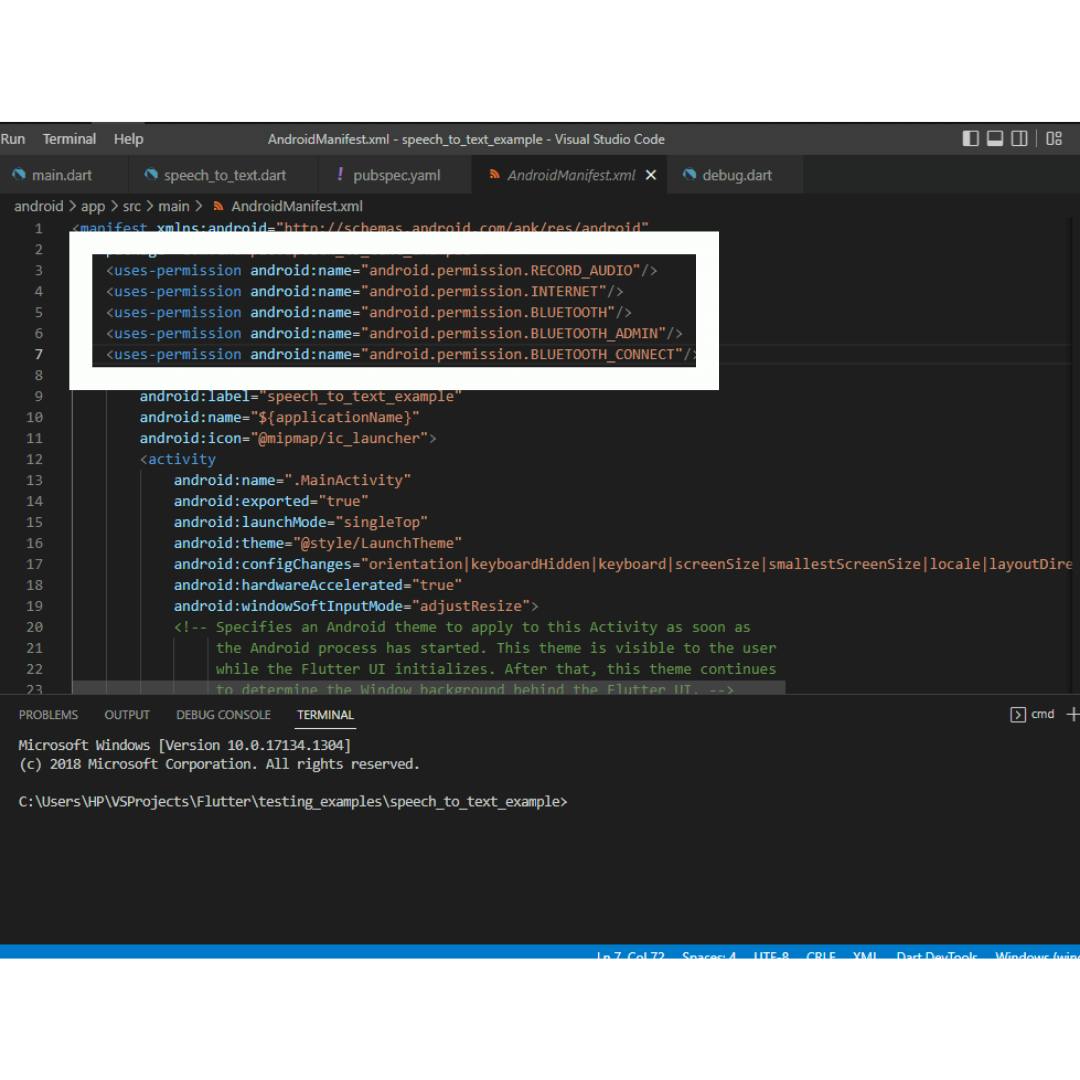
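In text form, the dependency entry might look like the sketch below. The `any` constraint is an assumption used here to avoid naming a specific release; for a real project, pin the version currently listed on pub.dev, or run `flutter pub add speech_to_text` and let the tool write the entry for you.

```yaml
dependencies:
  flutter:
    sdk: flutter
  # "any" is a placeholder constraint; pin the version shown on pub.dev instead
  speech_to_text: any
```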

The plugin also requires permission to use audio recording, Bluetooth (for integrated usage), Bluetooth admin, and the internet. To grant these on Android, open the AndroidManifest.xml file at android/app/src/main/AndroidManifest.xml and add the permissions inside the manifest element, just before the application element.
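The four permissions listed above correspond to standard Android permission names. A minimal sketch of where they sit in the manifest, assuming a default Flutter project layout (the package name is hypothetical):

```xml
<manifest xmlns:android="http://schemas.android.com/apk/res/android"
    package="com.example.app"> <!-- hypothetical package name -->

    <!-- Microphone access for speech recognition -->
    <uses-permission android:name="android.permission.RECORD_AUDIO" />
    <!-- Network access for the recognition service -->
    <uses-permission android:name="android.permission.INTERNET" />
    <!-- Bluetooth headset support -->
    <uses-permission android:name="android.permission.BLUETOOTH" />
    <uses-permission android:name="android.permission.BLUETOOTH_ADMIN" />

    <application>
        <!-- existing application configuration stays unchanged -->
    </application>
</manifest>
```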

Then import it into your code and create an instance of it, as in the snippet below:

(Note: This is not the complete code. A link to the complete code repo is at the end of this article.)

    import 'package:flutter/material.dart';
    import 'package:speech_to_text/speech_recognition_result.dart';
    import 'package:speech_to_text/speech_to_text.dart';

    /// Button that opens the speech-to-text dialog when pressed.
    class OutlineButtonWidget extends StatelessWidget {
      const OutlineButtonWidget({Key? key}) : super(key: key);

      @override
      Widget build(BuildContext context) {
        return OutlinedButton(
          child: const Text(
            "Click",
            style: TextStyle(
              fontWeight: FontWeight.bold,
              fontSize: 15,
            ),
          ),
          onPressed: () {
            showDialog<void>(
              context: context,
              builder: (context) {
                return const SpeechContainer();
              },
            );
          },
        );
      }
    }

    /// Dialog content that listens to the microphone and shows the recognized words.
    class SpeechContainer extends StatefulWidget {
      const SpeechContainer({Key? key}) : super(key: key);

      @override
      State<SpeechContainer> createState() => _SpeechContainerState();
    }

    class _SpeechContainerState extends State<SpeechContainer> {
      final SpeechToText _speechToText = SpeechToText();
      bool _speechEnabled = false;
      String _lastWords = "";

      @override
      void initState() {
        super.initState();
        _initSpeech();
      }

      // Initializes the plugin; this only needs to happen once.
      void _initSpeech() async {
        _speechEnabled = await _speechToText.initialize(debugLogging: true);
        // The dialog may have been dismissed while initialization was pending.
        if (!mounted) return;
        setState(() {});
      }

      // Starts a listening session and clears the previous result.
      void _startListening() async {
        _lastWords = "";
        await _speechToText.listen(onResult: _onSpeechResult);
        if (!mounted) return;
        setState(() {});
      }

      // Stops the current listening session.
      void _stopListening() async {
        await _speechToText.stop();
        if (!mounted) return;
        setState(() {});
      }

      // Called by the plugin each time it has recognized words to report.
      void _onSpeechResult(SpeechRecognitionResult result) {
        setState(
          () {
            _lastWords = result.recognizedWords;
          },
        );
      }

      @override
      Widget build(BuildContext context) {
        return Center(
          child: Container(
            width: MediaQuery.of(context).size.width - 10,
            height: MediaQuery.of(context).size.height / 2.5,
            color: Colors.white,
            alignment: Alignment.center,
            child: Column(
              mainAxisAlignment: MainAxisAlignment.spaceBetween,
              children: <Widget>[
                const SizedBox(
                  height: 10,
                ),
                const Text(
                  "Speech To Text",
                  style: TextStyle(
                    fontWeight: FontWeight.bold,
                    fontSize: 30,
                  ),
                ),
                const SizedBox(
                  height: 10,
                ),
                // Toggles between starting and stopping a listening session.
                TextButton(
                  child: _speechToText.isNotListening
                      ? const Icon(
                          Icons.mic,
                          size: 50,
                        )
                      : const Icon(
                          Icons.mic_off,
                          size: 50,
                        ),
                  onPressed: _speechToText.isNotListening
                      ? _startListening
                      : _stopListening,
                ),
                const SizedBox(
                  height: 10,
                ),
                Text(
                  _speechToText.isListening
                      ? _lastWords
                      : _speechEnabled
                          ? "Try saying something"
                          : "Didn't catch that. Try speaking again.",
                  style: const TextStyle(
                    fontSize: 17,
                  ),
                ),
                _speechToText.isListening
                    ? const SizedBox()
                    : OutlinedButton(
                        child: const Text("Try Again"),
                        style: OutlinedButton.styleFrom(
                          // onSurface is deprecated in newer Flutter releases
                          // (use disabledForegroundColor there).
                          onSurface: Colors.blue,
                          textStyle: const TextStyle(fontSize: 12),
                        ),
                        onPressed: () {}),
                const SizedBox(
                  height: 10,
                ),
              ],
            ),
          ),
        );
      }
    }

Running the complete speech_to_text_example code produces the view below:

(Note: The 'view' is a gif file)

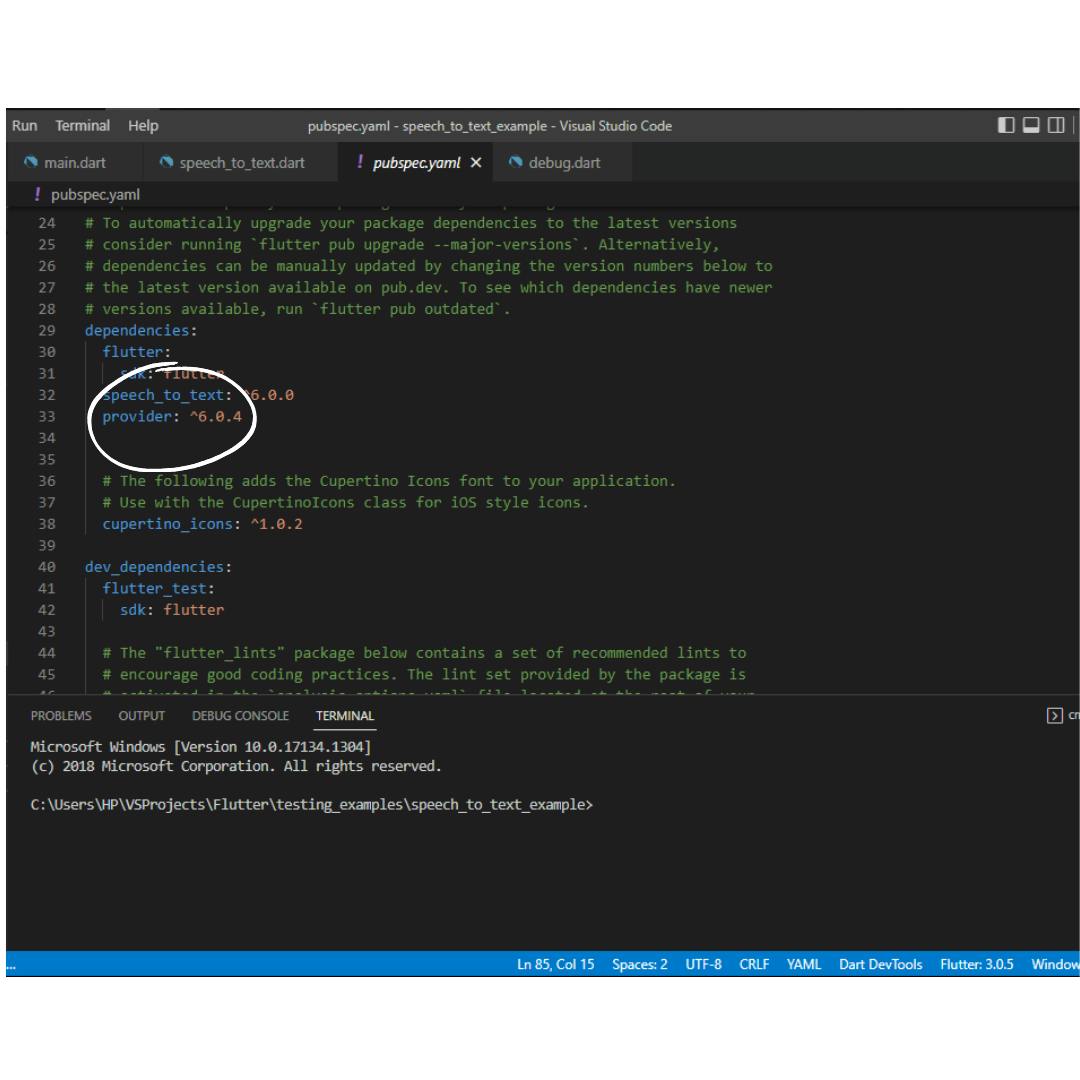

As specified in the plugin's guide on pub.dev, you only need to instantiate the SpeechToText class once. So, to use it in multiple widgets, you can make use of the SpeechToTextProvider class, which requires adding provider as a dependency in your pubspec.yaml file.
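A sketch of the dependencies section with provider added next to the plugin. As before, `any` is a placeholder constraint chosen for illustration; pin the versions listed on pub.dev in a real project.

```yaml
dependencies:
  flutter:
    sdk: flutter
  # Placeholder constraints; replace with pinned versions from pub.dev
  speech_to_text: any
  provider: any
```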

Then create references to it wherever you need them. In this case, I created different widgets and different screens to demonstrate how the words recognized through speech_to_text_provider can be shared between different screens (and widgets).
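For Provider.of<SpeechToTextProvider>(context) to find anything, a provider has to be registered above the widgets that read it. The sketch below shows one way this might be wired up at the app root, modeled on the plugin's provider example. The names MyApp and _MyAppState and the Placeholder home widget are assumptions for illustration, not taken from the article's repo.

```dart
import 'package:flutter/material.dart';
import 'package:provider/provider.dart';
import 'package:speech_to_text/speech_to_text.dart';
import 'package:speech_to_text/speech_to_text_provider.dart';

void main() => runApp(const MyApp());

// Hypothetical root widget; the name is an assumption for illustration.
class MyApp extends StatefulWidget {
  const MyApp({Key? key}) : super(key: key);

  @override
  State<MyApp> createState() => _MyAppState();
}

class _MyAppState extends State<MyApp> {
  // The single SpeechToText instance, wrapped once by the provider.
  final SpeechToText _speech = SpeechToText();
  late final SpeechToTextProvider _speechProvider;

  @override
  void initState() {
    super.initState();
    _speechProvider = SpeechToTextProvider(_speech);
    // Initialization is asynchronous and runs in the background here.
    _speechProvider.initialize();
  }

  @override
  Widget build(BuildContext context) {
    // Exposes the existing provider instance to every widget below it.
    return ChangeNotifierProvider<SpeechToTextProvider>.value(
      value: _speechProvider,
      child: const MaterialApp(
        // Swap in a screen that reads the provider.
        home: Placeholder(),
      ),
    );
  }
}
```

Because the provider sits above MaterialApp, every screen pushed onto the navigator can read the same recognized words.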

    import "package:flutter/material.dart";
    import "package:provider/provider.dart";
    import "package:speech_to_text/speech_to_text_provider.dart";

    /// Screen that drives speech recognition through the shared provider.
    class MicrophoneScreen extends StatelessWidget {
      const MicrophoneScreen({Key? key}) : super(key: key);

      @override
      Widget build(BuildContext context) {
        // Rebuilds this widget whenever the provider notifies its listeners.
        var speechProvider = Provider.of<SpeechToTextProvider>(context);

        return Scaffold(
          backgroundColor: Colors.black54,
          body: SafeArea(
            child: Center(
              child: Container(
                color: Colors.white,
                width: MediaQuery.of(context).size.width - 10,
                height: MediaQuery.of(context).size.height / 2.5,
                child: Column(
                  crossAxisAlignment: CrossAxisAlignment.center,
                  mainAxisAlignment: MainAxisAlignment.spaceBetween,
                  children: <Widget>[
                    const SizedBox(
                      height: 10,
                    ),
                    const Text(
                      "Speech Provider",
                      style: TextStyle(
                        fontWeight: FontWeight.bold,
                        fontSize: 30,
                      ),
                    ),
                    const SizedBox(
                      height: 10,
                    ),
                    // Toggles between starting and stopping a listening session.
                    TextButton(
                      child: speechProvider.isNotListening
                          ? const Icon(
                              Icons.mic,
                              size: 50,
                            )
                          : const Icon(
                              Icons.mic_off,
                              size: 50,
                            ),
                      onPressed: speechProvider.isNotListening
                          ? speechProvider.listen
                          : speechProvider.stop,
                    ),
                    const SizedBox(
                      height: 10,
                    ),
                    // Shows the latest recognized words while listening.
                    speechProvider.isListening
                        ? Text(speechProvider.lastResult?.recognizedWords ?? "")
                        : const Text(
                            "Try saying something",
                            style: TextStyle(
                              fontSize: 16,
                            ),
                          ),
                    speechProvider.isListening
                        ? const SizedBox()
                        : OutlinedButton(
                            child: const Text("Try Again"),
                            style: OutlinedButton.styleFrom(
                              // onSurface is deprecated in newer Flutter releases
                              // (use disabledForegroundColor there).
                              onSurface: Colors.blue,
                              textStyle: const TextStyle(fontSize: 12),
                            ),
                            onPressed: () {},
                          ),
                    const SizedBox(
                      height: 10,
                    )
                  ],
                ),
              ),
            ),
          ),
        );
      }
    }

Running the complete speech_to_text_provider_example code produces the view below:

Issue encountered

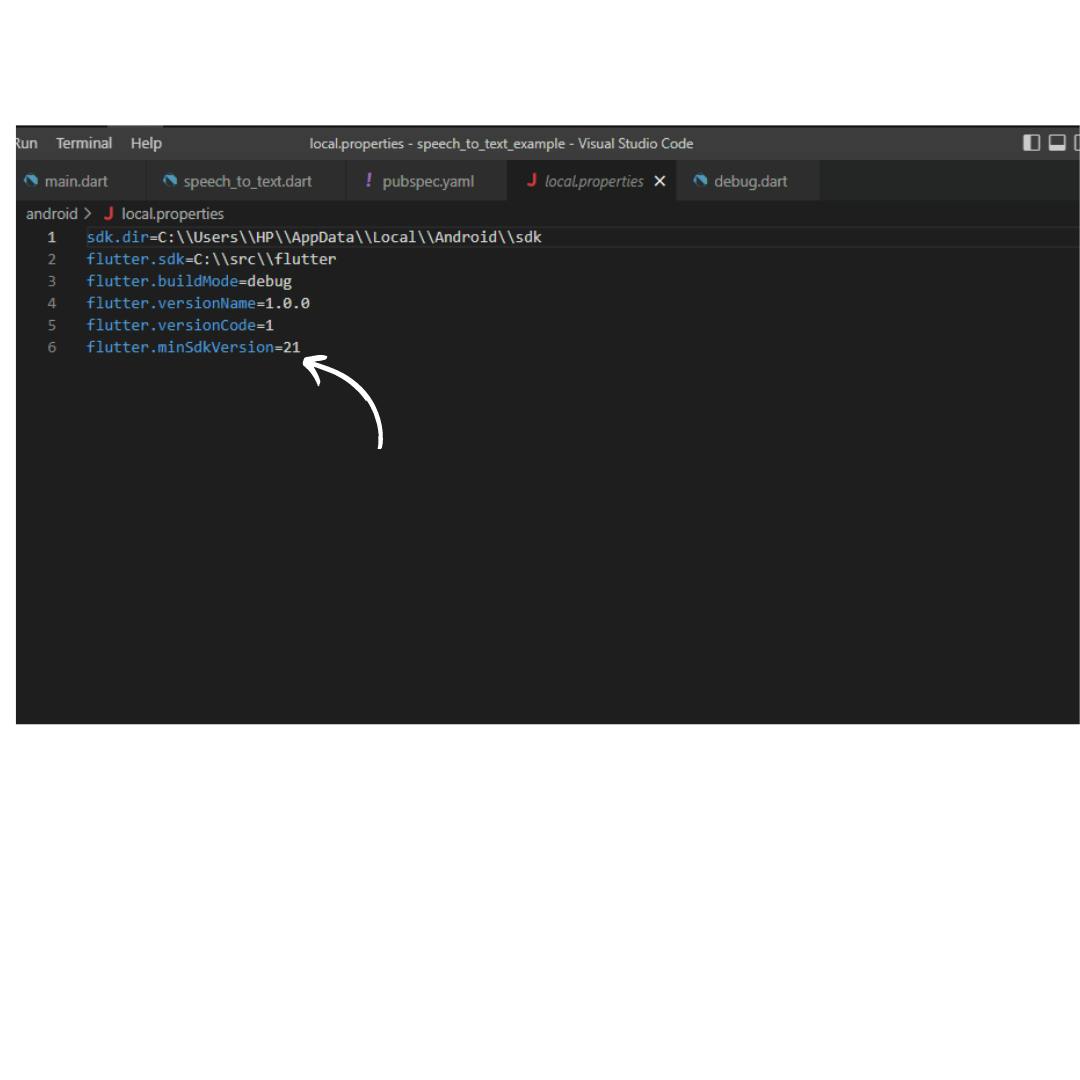

The issue I encountered while using the package was an Android minSdkVersion error. To solve it, you need to raise the minimum SDK version from 16 to 21, because the plugin requires a minimum version of 21. To change the minSdkVersion on Flutter versions higher than 2.8.0, open the local.properties file in the android folder, then add the line flutter.minSdkVersion=21, like this:

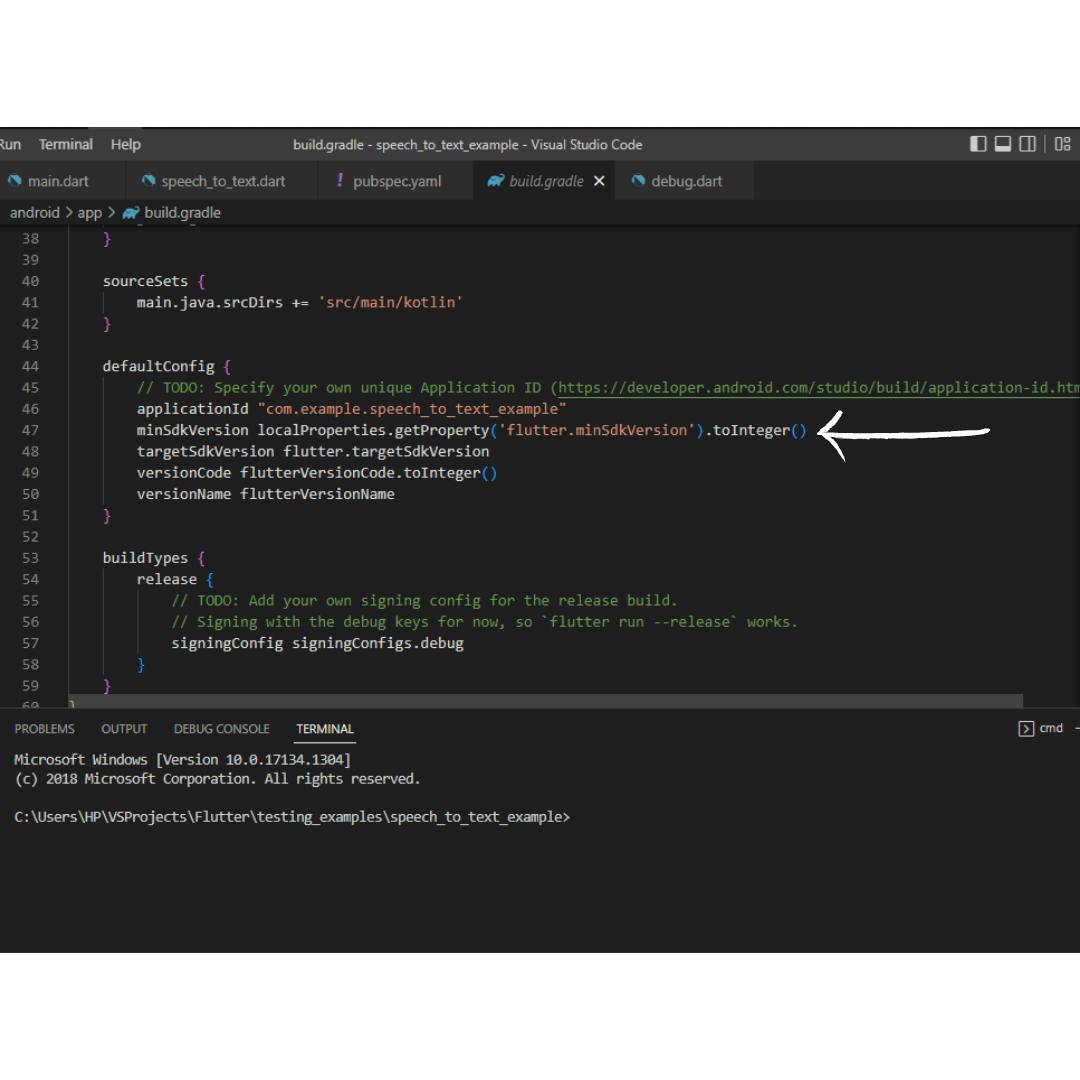

Then reference that value in android/app/build.gradle, inside the defaultConfig block, by changing the minSdkVersion line from flutter.minSdkVersion to localProperties.getProperty('flutter.minSdkVersion').toInteger().
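A sketch of the relevant part of android/app/build.gradle after the change. It assumes the default Flutter template, which defines a localProperties variable near the top of the file by loading local.properties; if your build.gradle does not define it, this line will not resolve.

```groovy
android {
    defaultConfig {
        // Before: minSdkVersion flutter.minSdkVersion
        // Reads flutter.minSdkVersion=21 from android/local.properties
        minSdkVersion localProperties.getProperty('flutter.minSdkVersion').toInteger()
    }
}
```

Writing the number directly (minSdkVersion 21) should have the same effect; reading it from local.properties keeps the value out of the build script.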

Then run flutter clean, followed by flutter pub get.

For the full working code referenced in this article, you can check the GitHub repo:

The speech_to_text package has other features, such as changing the locale, that I didn't cover in this article.

If you have observations or questions, you can leave a comment.